- Generative AI Artworks

-

This page showcases a collection of experimental artworks created with generative AI models. The works were produced by combining latent diffusion models (LDMs) such as SD1.5, SDXL, and FLUX.1 Dev with large language models (LLMs) and vision-language models (VLMs). The primary aim is to explore how prompts interact with these models. Most of the LDM-based pieces also rely on additional techniques such as inpainting and ControlNet to improve overall image quality, rather than on a single model alone.

I believe that, in the near future, designers will need to embrace generative AI as a new design tool and learn to use it effectively. The images below are examples of generative artworks I created. For some of them, the prompts used to generate them are also provided.
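Because the focus here is on how prompts interact with the models, the sketch below shows one generic way a caption (for example, one produced by a VLM such as Florence-2) could be combined with weighted style modifiers into a positive and a negative prompt. This is an illustrative assumption, not the workflow used for these pieces. The `build_prompt` helper, the caption text, and the modifier terms are all hypothetical. The `(term:weight)` emphasis syntax follows the convention ComfyUI accepts in its text prompts for SD-family models. How strongly a given model responds to it may vary.

```python
from __future__ import annotations


def build_prompt(
    caption: str,
    modifiers: dict[str, float],
    negative: list[str] | None = None,
) -> tuple[str, str]:
    """Hypothetical helper: merge a caption with weighted style modifiers.

    A weight of 1.0 leaves the term plain; any other weight is wrapped in
    the (term:weight) emphasis syntax. Returns (positive, negative) prompts.
    """
    # Drop the caption's trailing period so it joins cleanly with the modifiers.
    parts = [caption.strip().rstrip(".")]
    for term, weight in modifiers.items():
        if weight == 1.0:
            parts.append(term)
        else:
            # ":g" prints 1.2 as "1.2" instead of "1.200000".
            parts.append(f"({term}:{weight:g})")
    positive = ", ".join(parts)
    negative_prompt = ", ".join(negative or [])
    return positive, negative_prompt


if __name__ == "__main__":
    # Placeholder caption standing in for a VLM's output.
    caption = "A woman standing in a neon-lit alley at night."
    pos, neg = build_prompt(
        caption,
        {"cinematic lighting": 1.2, "film grain": 1.0, "35mm photo": 0.8},
        ["blurry", "extra fingers"],
    )
    print(pos)  # A woman standing in a neon-lit alley at night, (cinematic lighting:1.2), film grain, (35mm photo:0.8)
    print(neg)  # blurry, extra fingers
```

Keeping the caption and the style terms separate makes it easy to vary one weight at a time and observe how the output changes, which is the kind of prompt experimentation described above.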

-

VLM, LLM

Florence-2

ChatGPT

-

LDMs

Stable Diffusion 1.5

ControlNet, IC-Light, IPAdapter, ReActor...

SDXL

+ ModelSamplingContinuousEDM...

FLUX.1, Krea & Kontext

+ ControlNet Union, PuLID, Fill & Redux...

-

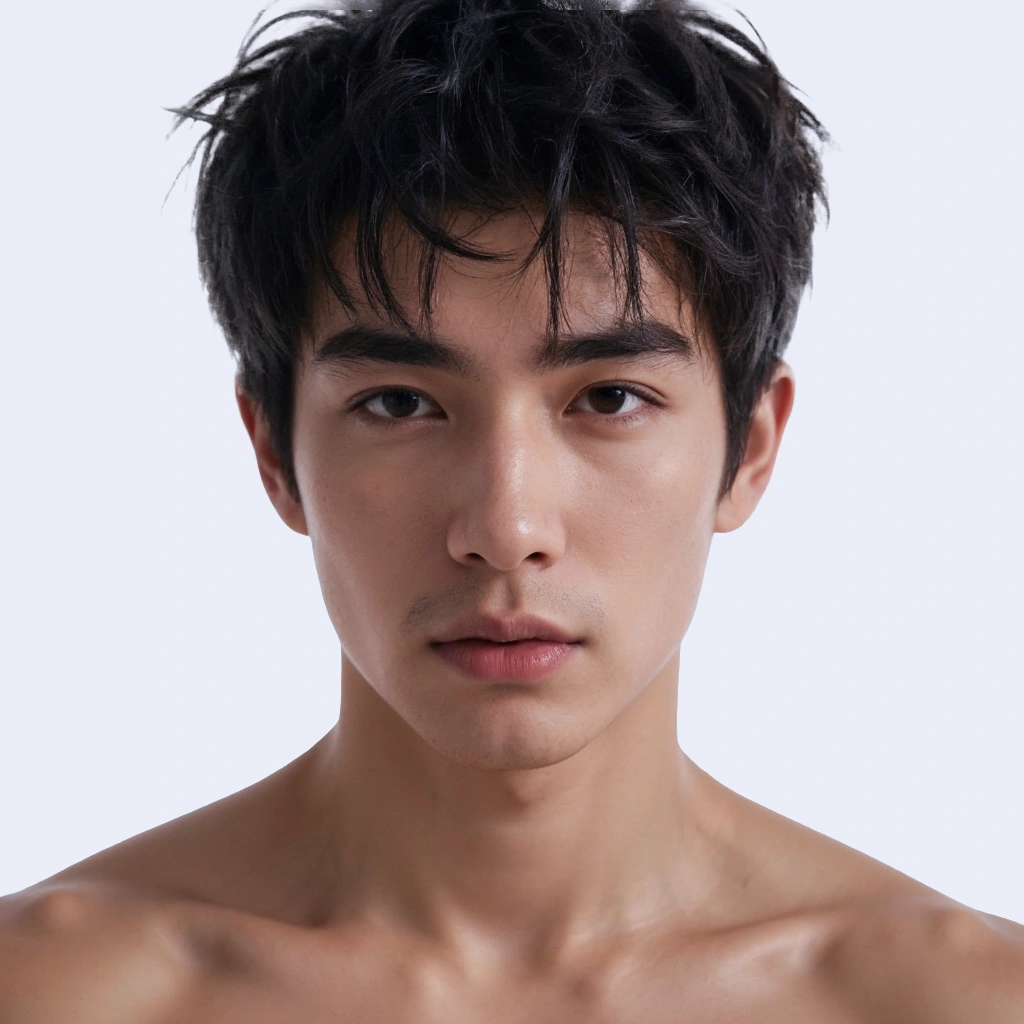

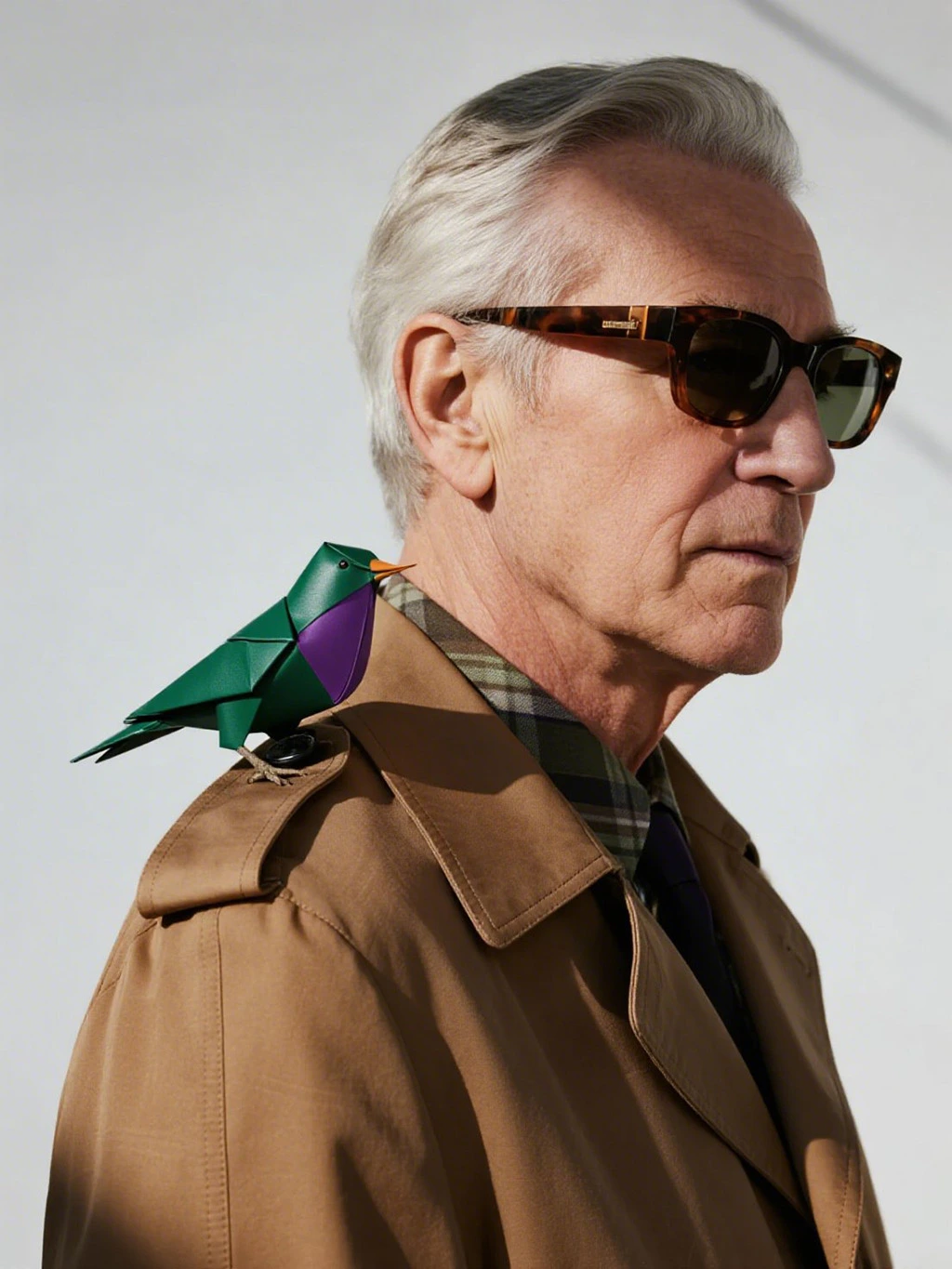

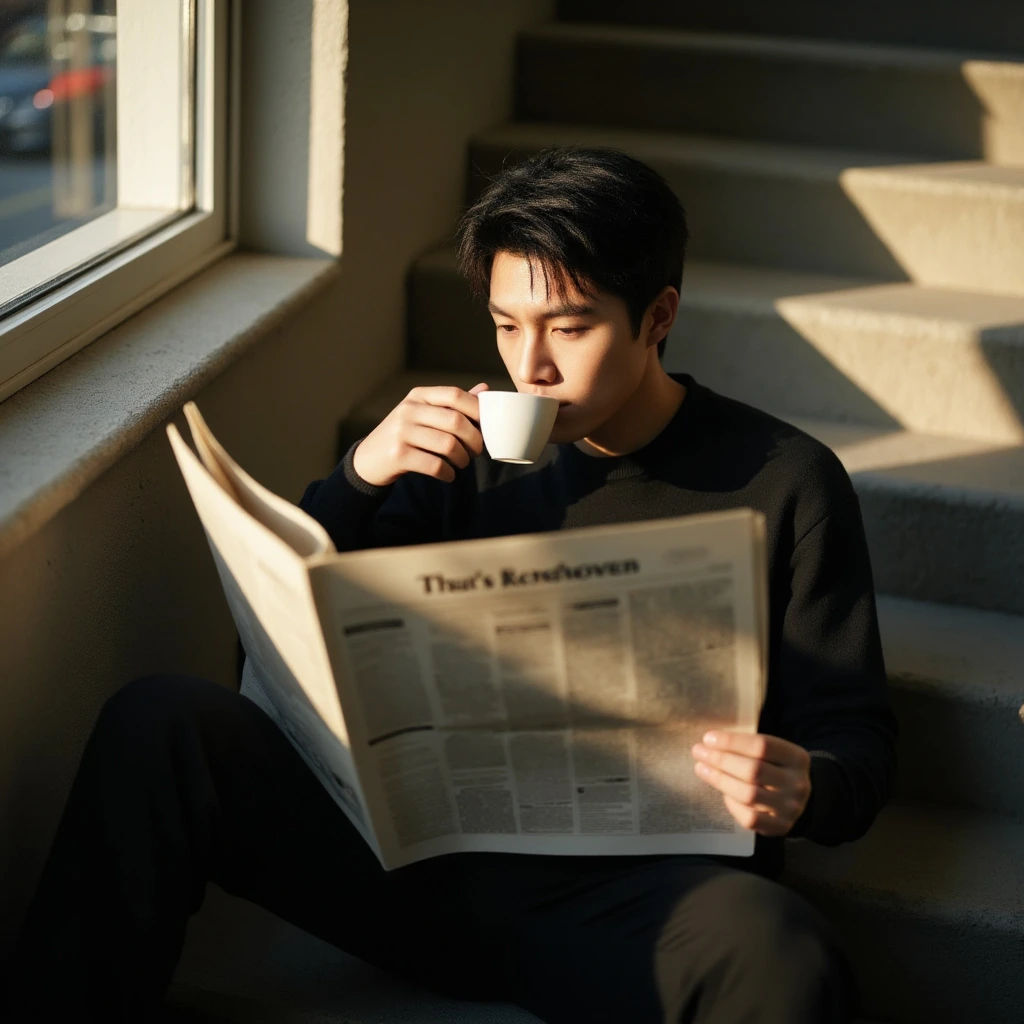

Profile40

Profile39

Profile38

Profile37

Profile36

Profile35

Profile34

Profile33

Profile32

Profile31

Profile30

Profile29

Profile28

Profile27

Profile26

Profile25

Profile24

Profile23

Profile22

Profile21

Profile20

Profile19

Profile18

Profile17

Profile16

Profile15

Profile14

Profile13

Profile12

Profile11

Profile10

Profile9

Profile8

Profile7

Profile6

Profile5

Profile4

Profile3

Profile2

Profile1

-

Image 3.1 (Seedream)

Image 3.1 (Seedream)

Image 3.1 (Seedream)

Image 3.1 (Seedream)

FLUX.1 Krea (ComfyUI)

FLUX.1 Krea (ComfyUI)

FLUX.1 Krea (ComfyUI)

Imagen 4 (ImageFX)

Imagen 3 (ImageFX)

FLUX.1 (ComfyUI)

FLUX.1 (ComfyUI)

Imagen 3 (ImageFX)

FLUX.1 (ComfyUI)

FLUX.1 (ComfyUI)

FLUX.1 (ComfyUI)

FLUX.1 (ComfyUI)

Imagen 3 (ImageFX)

FLUX.1 (ComfyUI)

FLUX.1 (ComfyUI)

Imagen 3 (ImageFX)

FLUX.1 (ComfyUI)

SDXL (ComfyUI)

Imagen 3 (ImageFX)

FLUX.1 (ComfyUI)

FLUX.1 (ComfyUI)

FLUX.1 (ComfyUI)

FLUX.1 (ComfyUI)

FLUX.1 (ComfyUI)

FLUX.1 (ComfyUI)

FLUX.1 (ComfyUI)

FLUX.1 (ComfyUI)

FLUX.1 (ComfyUI)

FLUX.1 (ComfyUI)

FLUX.1 Krea (ComfyUI)

FLUX.1 Krea (ComfyUI)

FLUX.1 (ComfyUI)

SDXL (ComfyUI)

FLUX.1 (ComfyUI)

FLUX.1 (ComfyUI)

FLUX.1 (ComfyUI)

FLUX.1 (ComfyUI)

SD1.5 (ComfyUI)

FLUX.1 (ComfyUI)

Image 3.1 (CapCut)

FLUX.1 (ComfyUI)

FLUX.1 (ComfyUI)

SD3 (ComfyUI)

FLUX.1 (ComfyUI)

SD1.5 (ComfyUI)

Imagen 3 (ImageFX)

FLUX.1 (ComfyUI)

SDXL (ComfyUI)

FLUX.1 (ComfyUI)

FLUX.1 (ComfyUI)

SDXL (ComfyUI)

FLUX.1 (ComfyUI)

SDXL (ComfyUI)